QR Householder Method

So far, we have only shown existence, but how do we compute QR decompositions?

Householder Reflections

A Householder reflection is $H=I-2vv'$, where $\|v\|_2=1$; it reflects vectors across the hyperplane orthogonal to $v$.

Lemma. Householder reflections are orthogonal matrices


Proof. $~$ HOMEWORK

We use a series of Householder reflections to reduce $A\Pi$ to an upper triangular matrix, and the resulting product of Householder matrices gives us $Q$, i.e. $$\underbrace{H_r\cdots H_1}_{Q'}A\Pi=R$$

First, find $H_1$ that makes every entry below $R_{11}$ zero, where $a_1$ denotes the first column of $A\Pi$:

\begin{align*}
H_1a_1&=R_{11}e_1\\
R_{11}e_1&=a_1-2v_1v_1'a_1\\
v_1(\underbrace{2v_1'a_1}_{\text{scalar}})&=a_1-R_{11}e_1\\
\implies~v_1&=\frac{a_1-R_{11}e_1}{\|a_1-R_{11}e_1\|_2}\\
\implies~R_{11}&=\pm\|a_1\|_2\\
v_1&=\frac{a_1-\|a_1\|_2e_1}{\|a_1-\|a_1\|_2e_1\|_2}\\
\implies H_1&=I-\frac{2(a_1-\|a_1\|_2e_1)(a_1-\|a_1\|_2e_1)'}{\|a_1-\|a_1\|_2e_1\|_2^2}
\end{align*}

The value $R_{11}=\pm\|a_1\|_2$ follows because $H_1$ is orthogonal and therefore preserves the 2-norm of $a_1$; the last two lines take the $+$ sign.

Then, we have

$$H_1A\Pi~=~\left[\begin{array}{c|ccc}R_{11}&\ast&\cdots&\ast\\\hline 0&&&\\\vdots&&\underbrace{\tilde{A}_2}_{\text{repeat on these}}&\\0&&&\end{array}\right]$$
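The repeated reduction can be sketched in plain Python as follows. This is a minimal illustration rather than a reference implementation: the function name `householder_qr` is hypothetical, column pivoting ($\Pi$) is omitted, and the sign of $R_{kk}$ is chosen as $-\operatorname{sign}(x_1)\|x\|_2$ (a common variation on the $+$ sign used in the derivation) so that $x-R_{kk}e_1$ never suffers cancellation.

```python
import math

def householder_qr(A):
    """Return (Q, R) with A = Q R for an n x m list-of-lists A, n >= m."""
    n, m = len(A), len(A[0])
    R = [list(map(float, row)) for row in A]        # working copy, becomes R
    Q = [[float(i == j) for j in range(n)] for i in range(n)]
    for k in range(m):
        # The part of column k on and below the diagonal plays the role of a_1.
        x = [R[i][k] for i in range(k, n)]
        norm_x = math.sqrt(sum(t * t for t in x))
        if norm_x == 0.0:
            continue                                # nothing to eliminate
        # Assumed sign choice (differs from the text): avoids cancellation.
        alpha = -math.copysign(norm_x, x[0])
        v = x[:]
        v[0] -= alpha                               # v = x - R_kk e_1
        norm_v = math.sqrt(sum(t * t for t in v))
        v = [t / norm_v for t in v]
        # Apply H = I - 2 v v' from the left to the trailing block of R.
        for j in range(k, m):
            dot = sum(v[i] * R[k + i][j] for i in range(n - k))
            for i in range(n - k):
                R[k + i][j] -= 2.0 * v[i] * dot
        # Accumulate Q <- Q H, so that Q = H_1 H_2 ... H_m.
        for r in range(n):
            dot = sum(Q[r][k + i] * v[i] for i in range(n - k))
            for i in range(n - k):
                Q[r][k + i] -= 2.0 * dot * v[i]
    return Q, R

Q, R = householder_qr([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
```

Each reflection is applied as a rank-one update, so no $n\times n$ Householder matrix is ever formed explicitly. Entries of `R` below the diagonal come out as rounding-level values rather than exact zeros.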

Givens Rotations

A Givens rotation $G^{(i,j)}$ is the identity matrix except for the entries

- $G^{(i,j)}_{ii}=G^{(i,j)}_{jj}=\lambda$

- $G^{(i,j)}_{ij}=\sigma$

- $G^{(i,j)}_{ji}=-\sigma$

$$\text{for example, }G^{(2,4)}=\begin{bmatrix}1&0&0&0\\0&\lambda&0&\sigma\\0&0&1&0\\0&-\sigma&0&\lambda\end{bmatrix}$$

HOMEWORK $~$ Show that a Givens rotation is orthogonal when $\sigma^2+\lambda^2=1$

We can also use Givens rotations to compute the decomposition, so that $$\underbrace{G_T\cdots G_1}_{Q'}A\Pi=R$$

To find $G_1$, we look for a Givens rotation $G^{(1,2)}$ that will make the entry in row 2, column 1 of the product equal to zero. Consider the product of its nontrivial $2\times 2$ block with the first two rows of a matrix $M$: $$\begin{bmatrix}\lambda&\sigma\\-\sigma&\lambda\end{bmatrix}\begin{bmatrix}M_{11}&M_{12}&\cdots&M_{1m}\\M_{21}&M_{22}&\cdots&M_{2m}\end{bmatrix}=\begin{bmatrix}\lambda M_{11}+\sigma M_{21}&\lambda M_{12}+\sigma M_{22}&\cdots&\lambda M_{1m}+\sigma M_{2m}\\-\sigma M_{11}+\lambda M_{21}&-\sigma M_{12}+\lambda M_{22}&\cdots&-\sigma M_{1m}+\lambda M_{2m}\end{bmatrix}$$

To find $G_1$, we solve $$\begin{aligned}-\sigma M_{11}+\lambda M_{21}&=0\\\text{s.t. }~\sigma^2+\lambda^2&=1\end{aligned}\quad\implies\quad\lambda=\frac{M_{11}}{\sqrt{M_{11}^2+M_{21}^2}}~~~\text{and}~~~\sigma=\frac{M_{21}}{\sqrt{M_{11}^2+M_{21}^2}}$$

Now, we can repeat this process to successively make all entries below the diagonal zero, giving us a QR decomposition.
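As an illustration of this sweep, here is a small sketch in plain Python. The names `givens_coeffs` and `givens_qr` are hypothetical, pivoting is omitted, and the elimination order (column by column, rotating each lower row against the diagonal row) is one possible choice among several.

```python
import math

def givens_coeffs(m11, m21):
    """Return (lam, sig) with -sig*m11 + lam*m21 = 0 and lam^2 + sig^2 = 1."""
    r = math.hypot(m11, m21)
    if r == 0.0:
        return 1.0, 0.0              # both entries already zero: no rotation
    return m11 / r, m21 / r

def givens_qr(A):
    """Return (Qt, R) with Qt A = R, where Qt = G_T ... G_1 plays the role of Q'."""
    n, m = len(A), len(A[0])
    R = [list(map(float, row)) for row in A]
    Qt = [[float(i == j) for j in range(n)] for i in range(n)]
    for j in range(m):               # column whose subdiagonal entries we clear
        for i in range(j + 1, n):    # rotate rows j and i so that R[i][j] = 0
            lam, sig = givens_coeffs(R[j][j], R[i][j])
            for M in (R, Qt):        # the same rotation acts on R and on Q'
                row_j, row_i = M[j], M[i]
                M[j] = [lam * a + sig * b for a, b in zip(row_j, row_i)]
                M[i] = [-sig * a + lam * b for a, b in zip(row_j, row_i)]
    return Qt, R

Qt, R = givens_qr([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
```

Because each rotation touches only two rows, entries that are already zero can be skipped entirely, which is why Givens rotations are attractive for sparse matrices.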

HOMEWORK

1. Determine the computational complexity for QR decomposition using

   - Gram-Schmidt

   - Modified Gram-Schmidt

   - Householder reflections

   - Givens rotations

2. Compare the complexity of Householder vs Givens for a sparse matrix

3. Implement QR decomposition using Householder reflections (input: matrix $A$ of full column rank; output: $Q,R$)

4. Repeat 3 using Givens rotations


'Large' data least squares

$A\in\mathbb{R}^{n\times m}$, where $n\gg m$ (which is a different situation from $m\gg n$)

Optional reference: 'Incremental QR Decomposition', by Gentleman.

We can apply QR decomposition to solving the least squares equation $Ax=b$ since

\begin{align*}
\hat{x}&=(A'A)^{-1}A'b\\
&=((QR)'(QR))^{-1}(QR)'b\\
&=(R'\cancel{Q'Q}R)^{-1}R'Q'b\\
&=(R'R)^{-1}R'Q'b\\
&=R^{-1}\cancel{(R')^{-1}R'}Q'b\\
&=R^{-1}Q'b
\end{align*}

We need to find $R^{-1}$ and $Q'b$. To do so, consider just the first $m+1$ rows of the matrix $\begin{bmatrix}A&b\end{bmatrix}$, and perform QR decomposition to get $$\begin{bmatrix}\tilde{R}&\tilde{Q}'\tilde{b}\\0&\tilde{s}\end{bmatrix}$$ Then, we add one row at a time to the bottom and perform Givens rotations so that $$\begin{bmatrix}\tilde{R}&\tilde{Q}'\tilde{b}\\0&\tilde{s}\\a_{m+2}&b_{m+2}\end{bmatrix}~~~\implies~~~\begin{bmatrix}\tilde{R}_{m+2}&\tilde{Q}_{m+2}'\tilde{b}_{m+2}\\0&\tilde{s}_{m+2}\\0&0\end{bmatrix}$$
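A minimal sketch of this row-by-row update in plain Python is given below. All function names are hypothetical, and one simplification is assumed: instead of first factoring the leading $m+1$ rows, the triangle $[R \mid Q'b]$ starts at zero and every row of $\begin{bmatrix}A&b\end{bmatrix}$ is folded in with Givens rotations, which yields the same $R$ and $Q'b$ up to row signs. Only $m$ rows of length $m+1$ are kept in memory.

```python
import math

def add_row(T, row):
    """Fold one row [a_i, b_i] into the m x (m+1) triangle T = [R | Q'b]."""
    row = list(map(float, row))
    m = len(T)
    for k in range(m):
        r = math.hypot(T[k][k], row[k])
        if r == 0.0:
            continue                       # nothing to rotate in this column
        lam, sig = T[k][k] / r, row[k] / r
        for j in range(k, m + 1):          # rotate row k of T against the new row
            t, x = T[k][j], row[j]
            T[k][j] = lam * t + sig * x
            row[j] = -sig * t + lam * x
    return row[m]                          # what is left of b_i after the rotations

def back_substitute(T):
    """Solve R x = Q'b, where T = [R | Q'b] and R is upper triangular."""
    m = len(T)
    x = [0.0] * m
    for i in reversed(range(m)):
        tail = sum(T[i][j] * x[j] for j in range(i + 1, m))
        x[i] = (T[i][m] - tail) / T[i][i]
    return x

def streaming_least_squares(rows, m):
    """Least squares fit from an iterable of rows [a_i1, ..., a_im, b_i]."""
    T = [[0.0] * (m + 1) for _ in range(m)]
    for row in rows:
        add_row(T, row)
    return back_substitute(T)

# Hypothetical data lying exactly on b = 1 + 2 t, with rows [1, t, b].
x_hat = streaming_least_squares([[1, 0, 1], [1, 1, 3], [1, 2, 5], [1, 3, 7]], 2)
```

For the data above, `x_hat` should come out close to `[1.0, 2.0]`. The values returned by `add_row` are the rotated leftovers of each $b_i$; their squares accumulate to the residual sum of squares.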

QR Decomposition

The QR decomposition (or factorization) is an algorithm that converts a given matrix $A$ into a product $A=QR$ of an orthogonal matrix $Q$ and a right or upper triangular matrix $R$ with $r_{ij}=0$ for $i>j$. In the following we consider two methods for the QR decomposition.

- The Householder transformation:

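We first construct a Householder matrix $H_1$ based on the first column vector of the $N\times N$ matrix $A$, by which that column will be converted into a vector with its last $N-1$ elements equal to zero:

$$H_1A=\begin{bmatrix}r_{11}&r_{12}&\cdots&r_{1N}\\0&&&\\\vdots&&A_2&\\0&&&\end{bmatrix}$$

We then construct the second transformation matrix

$$H_2=\begin{bmatrix}1&\mathbf{0}'\\\mathbf{0}&\tilde{H}_2\end{bmatrix}$$

where $\tilde{H}_2$ is an $(N-1)$-dimensional Householder matrix that eliminates the last $N-2$ elements of the first column of $A_2$:

$$H_2H_1A=\begin{bmatrix}r_{11}&r_{12}&r_{13}&\cdots&r_{1N}\\0&r_{22}&r_{23}&\cdots&r_{2N}\\0&0&&&\\\vdots&\vdots&&A_3&\\0&0&&&\end{bmatrix}\tag{47}$$

This process is repeated with the general $k$th transformation matrix

$$H_k=\begin{bmatrix}I_{k-1}&\mathbf{0}\\\mathbf{0}&\tilde{H}_k\end{bmatrix}$$

where $\tilde{H}_k$ is an $(N-k+1)$-dimensional Householder matrix that eliminates the last $N-k$ elements of the first column of the sub-matrix $A_k$ of the same dimension obtained from the previous steps. After $N-1$ such iterations $A$ becomes an upper triangular matrix:

$$H_{N-1}\cdots H_2H_1A=R\tag{49}$$

Define $Q=(H_{N-1}\cdots H_2H_1)^{-1}$ and pre-multiply it on both sides to get the QR decomposition of $A$:

$$A=QR$$

where $Q$ defined above is an orthogonal matrix:

$$Q=(H_{N-1}\cdots H_2H_1)^{-1}=H_1^{-1}H_2^{-1}\cdots H_{N-1}^{-1}=H_1H_2\cdots H_{N-1}\tag{51}$$

To find the complexity of this algorithm, we note that the Householder transformation of a column vector is $O(N)$, which is needed for all $O(N)$ columns of a submatrix in each of the $N-1$ iterations. Therefore the total complexity is $O(N^3)$. However, if $A$ is a Hessenberg matrix (or more specially a tridiagonal matrix), then the Householder transformation of each column vector takes constant time (for the only subdiagonal component), and the complexity becomes $O(N^2)$.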

Note that this method cannot convert $A$ into a diagonal matrix by performing Householder transformations on the rows (from the right) as well as on the columns (from the left), similar to the tridiagonalization of a symmetric matrix previously considered. This is because when a Householder matrix is post-multiplied to convert a row, the column already converted by pre-multiplying a Householder matrix is changed again.

- The Gram-Schmidt process:

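The QR decomposition of $A=[a_1,\cdots,a_N]$ can also be obtained by converting the column vectors in $A$, assumed to be independent, into a set of orthonormal vectors $q_1,\cdots,q_N$, which form the columns of the orthogonal matrix $Q$.

We first find $q_1=a_1/\|a_1\|$ as the normalized version of the first column of $A$, and then find each of the remaining orthogonal vectors $\tilde{q}_i$ ($i=2,\cdots,N$), with all previous $q_1,\cdots,q_{i-1}$ already normalized:

$$\tilde{q}_i=a_i-\sum_{j=1}^{i-1}(q_j'a_i)\,q_j$$

followed by normalization $q_i=\tilde{q}_i/\|\tilde{q}_i\|$ so that $\|q_i\|=1$. Now $a_i$ can be represented as a linear combination of the vectors $q_1,\cdots,q_i$ as:

$$a_i=\|\tilde{q}_i\|\,q_i+\sum_{j=1}^{i-1}(q_j'a_i)\,q_j\tag{53}$$

We define $r_{ii}=\|\tilde{q}_i\|$ and $r_{ji}=q_j'a_i$ for $j<i$, and rewrite the above as

$$a_i=\sum_{j=1}^{i}r_{ji}\,q_j=[q_1,\cdots,q_N]\begin{bmatrix}r_{1i}\\\vdots\\r_{ii}\\0\\\vdots\\0\end{bmatrix}=Q\,r_i$$

where $r_i$ is the $i$th column of $R$, and $q_{i+1},\cdots,q_N$ are yet to be generated (but they are multiplied by zero and play no role here). Combining the equations above in matrix form, we get

$$A=[a_1,\cdots,a_N]=Q\,[r_1,\cdots,r_N]=QR\tag{55}$$

where $Q$ is orthogonal and $R$ is upper triangular. The complexity for computing $q_i$ and $r_i$ is $O(N^2)$, and the total complexity of this algorithm is $O(N^3)$.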
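The classical Gram-Schmidt process described above can be sketched in plain Python as follows. The function name `gram_schmidt_qr` is hypothetical, the input is assumed to have linearly independent columns, and no attempt is made at the better numerical behaviour of the modified variant.

```python
import math

def gram_schmidt_qr(A):
    """Return (Q, R) with A = Q R; Q has orthonormal columns, R is upper triangular."""
    n, m = len(A), len(A[0])
    cols = [[float(A[r][c]) for r in range(n)] for c in range(m)]  # columns of A
    q = []                                     # orthonormal vectors found so far
    R = [[0.0] * m for _ in range(m)]
    for i, a in enumerate(cols):
        w = a[:]
        for j, qj in enumerate(q):
            # Projection coefficient of the original column onto q_j.
            R[j][i] = sum(x * y for x, y in zip(qj, a))
            w = [wk - R[j][i] * qk for wk, qk in zip(w, qj)]
        # Norm of the remainder becomes the diagonal entry of R.
        R[i][i] = math.sqrt(sum(x * x for x in w))
        q.append([x / R[i][i] for x in w])
    Q = [[q[c][r] for c in range(m)] for r in range(n)]  # back to row-major
    return Q, R

Q, R = gram_schmidt_qr([[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
```

For a tall $n\times m$ input this returns the reduced factorization, with `Q` of size $n\times m$. Entries of `R` below the diagonal are exactly zero here because they are never written.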

The QR decomposition finds many applications, such as solving a linear system $Ax=b$:

$$Ax=QRx=Qy=b\tag{57}$$

where $y=Rx$ can be obtained as:

$$y=Q^{-1}b=Q'b$$

and then $x$ can be obtained by solving $Rx=y$ with an upper triangular coefficient matrix $R$ by back-substitution.

More importantly, the QR decomposition is the essential part of the QR algorithm for solving the eigenvalue problem of a general matrix, to be considered in the following section.
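The two-step solve for a linear system (first form $Q'b$, then back-substitute through the triangular factor) can be sketched as below. The function name `solve_with_qr` and the small example factors are hypothetical; a square, nonsingular system is assumed.

```python
def solve_with_qr(Q, R, b):
    """Solve A x = b given A = Q R with Q orthogonal and R upper triangular."""
    n = len(R)
    # Step 1: y = Q' b, using Q^{-1} = Q' for an orthogonal Q.
    y = [sum(Q[i][k] * b[i] for i in range(n)) for k in range(n)]
    # Step 2: back-substitution on R x = y, from the last row upwards.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(R[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (y[i] - tail) / R[i][i]
    return x

# Hypothetical factors: Q is a plane rotation, so A = Q R = [[3, -1], [4, 2]].
Q = [[0.6, -0.8], [0.8, 0.6]]
R = [[5.0, 1.0], [0.0, 2.0]]
x = solve_with_qr(Q, R, [1.0, 8.0])
```

Here the right-hand side was built from the solution $(1, 2)$, so `x` should be close to `[1.0, 2.0]`. No matrix inverse is formed: the orthogonal factor is only transposed and the triangular factor is only back-substituted.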

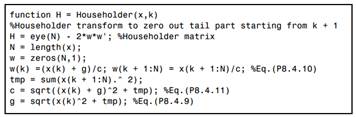

The Matlab code listed below carries out the QR decomposition by both the Householder transformation and the Gram-Schmidt method:
